Table of Contents

- Intro

- Using

- Design

- Testing

- Regression

- Performance

- Hash-Based

- Branch-Based

- Multimap

-

Text

findwith Small Secondary-to-Primary Key Ratios -

Text

findwith Large Secondary-to-Primary Key Ratios -

Text

insertwith Small Secondary-to-Primary Key Ratios -

Text

insertwith Small Secondary-to-Primary Key Ratios -

Text

insertwith Small Secondary-to-Primary Key Ratios Memory Use -

Text

insertwith Small Secondary-to-Primary Key Ratios Memory Use

-

Text

- Priority Queue

- Observations

- Acknowledgments

- Bibliography

This is a library of policy-based elementary data structures:

associative containers and priority queues. It is designed for

high-performance, flexibility, semantic safety, and conformance to

the corresponding containers in std and

std::tr1 (except for some points where it differs

by design).

An attempt is made to categorize the wide variety of possible container designs in terms of performance-impacting factors. These performance factors are translated into design policies and incorporated into container design.

There is tension between unravelling factors into a coherent set of policies. Every attempt is made to make a minimal set of factors. However, in many cases multiple factors make for long template names. Every attempt is made to alias and use typedefs in the source files, but the generated names for external symbols can be large for binary files or debuggers.

In many cases, the longer names allow capabilities and behaviours controlled by macros to also be unamibiguously emitted as distinct generated names.

Specific issues found while unraveling performance factors in the design of associative containers and priority queues follow.

Associative containers depend on their composite policies to a very large extent. Implicitly hard-wiring policies can hamper their performance and limit their functionality. An efficient hash-based container, for example, requires policies for testing key equivalence, hashing keys, translating hash values into positions within the hash table, and determining when and how to resize the table internally. A tree-based container can efficiently support order statistics, i.e. the ability to query what is the order of each key within the sequence of keys in the container, but only if the container is supplied with a policy to internally update meta-data. There are many other such examples.

Ideally, all associative containers would share the same interface. Unfortunately, underlying data structures and mapping semantics differentiate between different containers. For example, suppose one writes a generic function manipulating an associative container.

template<typename Cntnr>

void

some_op_sequence(Cntnr& r_cnt)

{

...

}

Given this, then what can one assume about the instantiating

container? The answer varies according to its underlying data

structure. If the underlying data structure of

Cntnr is based on a tree or trie, then the order

of elements is well defined; otherwise, it is not, in general. If

the underlying data structure of Cntnr is based

on a collision-chaining hash table, then modifying

r_Cntnr will not invalidate its iterators' order;

if the underlying data structure is a probing hash table, then this

is not the case. If the underlying data structure is based on a tree

or trie, then a reference to the container can efficiently be split;

otherwise, it cannot, in general. If the underlying data structure

is a red-black tree, then splitting a reference to the container is

exception-free; if it is an ordered-vector tree, exceptions can be

thrown.

Priority queues are useful when one needs to efficiently access a minimum (or maximum) value as the set of values changes.

Most useful data structures for priority queues have a relatively simple structure, as they are geared toward relatively simple requirements. Unfortunately, these structures do not support access to an arbitrary value, which turns out to be necessary in many algorithms. Say, decreasing an arbitrary value in a graph algorithm. Therefore, some extra mechanism is necessary and must be invented for accessing arbitrary values. There are at least two alternatives: embedding an associative container in a priority queue, or allowing cross-referencing through iterators. The first solution adds significant overhead; the second solution requires a precise definition of iterator invalidation. Which is the next point...

Priority queues, like hash-based containers, store values in an

order that is meaningless and undefined externally. For example, a

push operation can internally reorganize the

values. Because of this characteristic, describing a priority

queues' iterator is difficult: on one hand, the values to which

iterators point can remain valid, but on the other, the logical

order of iterators can change unpredictably.

Roughly speaking, any element that is both inserted to a priority

queue (e.g. through push) and removed

from it (e.g., through pop), incurs a

logarithmic overhead (in the amortized sense). Different underlying

data structures place the actual cost differently: some are

optimized for amortized complexity, whereas others guarantee that

specific operations only have a constant cost. One underlying data

structure might be chosen if modifying a value is frequent

(Dijkstra's shortest-path algorithm), whereas a different one might

be chosen otherwise. Unfortunately, an array-based binary heap - an

underlying data structure that optimizes (in the amortized sense)

push and pop operations, differs from the

others in terms of its invalidation guarantees. Other design

decisions also impact the cost and placement of the overhead, at the

expense of more difference in the kinds of operations that the

underlying data structure can support. These differences pose a

challenge when creating a uniform interface for priority queues.

Many fine associative-container libraries were already written, most notably, the C++ standard's associative containers. Why then write another library? This section shows some possible advantages of this library, when considering the challenges in the introduction. Many of these points stem from the fact that the ISO C++ process introduced associative-containers in a two-step process (first standardizing tree-based containers, only then adding hash-based containers, which are fundamentally different), did not standardize priority queues as containers, and (in our opinion) overloads the iterator concept.

Associative containers require a relatively large number of policies to function efficiently in various settings. In some cases this is needed for making their common operations more efficient, and in other cases this allows them to support a larger set of operations

Hash-based containers, for example, support look-up and insertion methods (

findandinsert). In order to locate elements quickly, they are supplied a hash functor, which instruct how to transform a key object into some size type; a hash functor might transform"hello"into1123002298. A hash table, though, requires transforming each key object into some size-type type in some specific domain; a hash table with a 128-long table might transform"hello"into position63. The policy by which the hash value is transformed into a position within the table can dramatically affect performance. Hash-based containers also do not resize naturally (as opposed to tree-based containers, for example). The appropriate resize policy is unfortunately intertwined with the policy that transforms hash value into a position within the table.Tree-based containers, for example, also support look-up and insertion methods, and are primarily useful when maintaining order between elements is important. In some cases, though, one can utilize their balancing algorithms for completely different purposes.

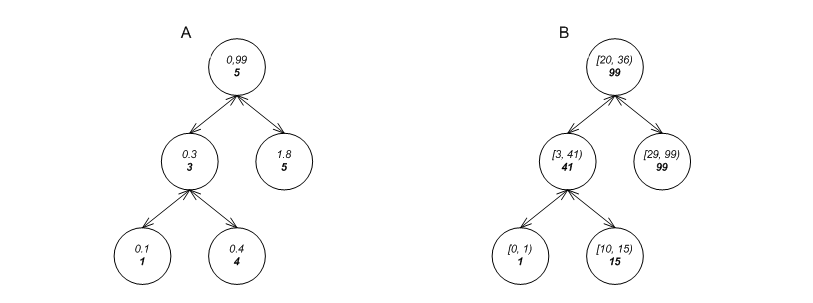

Figure A shows a tree whose each node contains two entries: a floating-point key, and some size-type metadata (in bold beneath it) that is the number of nodes in the sub-tree. (The root has key 0.99, and has 5 nodes (including itself) in its sub-tree.) A container based on this data structure can obviously answer efficiently whether 0.3 is in the container object, but it can also answer what is the order of 0.3 among all those in the container object: see [biblio.clrs2001].

As another example, Figure B shows a tree whose each node contains two entries: a half-open geometric line interval, and a number metadata (in bold beneath it) that is the largest endpoint of all intervals in its sub-tree. (The root describes the interval

[20, 36), and the largest endpoint in its sub-tree is 99.) A container based on this data structure can obviously answer efficiently whether[3, 41)is in the container object, but it can also answer efficiently whether the container object has intervals that intersect[3, 41). These types of queries are very useful in geometric algorithms and lease-management algorithms.It is important to note, however, that as the trees are modified, their internal structure changes. To maintain these invariants, one must supply some policy that is aware of these changes. Without this, it would be better to use a linked list (in itself very efficient for these purposes).

The standard C++ library contains associative containers based on red-black trees and collision-chaining hash tables. These are very useful, but they are not ideal for all types of settings.

The figure below shows the different underlying data structures currently supported in this library.

A shows a collision-chaining hash-table, B shows a probing hash-table, C shows a red-black tree, D shows a splay tree, E shows a tree based on an ordered vector(implicit in the order of the elements), F shows a PATRICIA trie, and G shows a list-based container with update policies.

Each of these data structures has some performance benefits, in terms of speed, size or both. For now, note that vector-based trees and probing hash tables manipulate memory more efficiently than red-black trees and collision-chaining hash tables, and that list-based associative containers are very useful for constructing "multimaps".

Now consider a function manipulating a generic associative container,

template<class Cntnr>

int

some_op_sequence(Cntnr &r_cnt)

{

...

}

Ideally, the underlying data structure

of Cntnr would not affect what can be

done with r_cnt. Unfortunately, this is not

the case.

For example, if Cntnr

is std::map, then the function can

use

std::for_each(r_cnt.find(foo), r_cnt.find(bar), foobar)

in order to apply foobar to all

elements between foo and

bar. If

Cntnr is a hash-based container,

then this call's results are undefined.

Also, if Cntnr is tree-based, the type

and object of the comparison functor can be

accessed. If Cntnr is hash based, these

queries are nonsensical.

There are various other differences based on the container's underlying data structure. For one, they can be constructed by, and queried for, different policies. Furthermore:

Containers based on C, D, E and F store elements in a meaningful order; the others store elements in a meaningless (and probably time-varying) order. By implication, only containers based on C, D, E and F can support

eraseoperations taking an iterator and returning an iterator to the following element without performance loss.Containers based on C, D, E, and F can be split and joined efficiently, while the others cannot. Containers based on C and D, furthermore, can guarantee that this is exception-free; containers based on E cannot guarantee this.

Containers based on all but E can guarantee that erasing an element is exception free; containers based on E cannot guarantee this. Containers based on all but B and E can guarantee that modifying an object of their type does not invalidate iterators or references to their elements, while containers based on B and E cannot. Containers based on C, D, and E can furthermore make a stronger guarantee, namely that modifying an object of their type does not affect the order of iterators.

A unified tag and traits system (as used for the C++ standard library iterators, for example) can ease generic manipulation of associative containers based on different underlying data structures.

Iterators are centric to the design of the standard library

containers, because of the container/algorithm/iterator

decomposition that allows an algorithm to operate on a range

through iterators of some sequence. Iterators, then, are useful

because they allow going over a

specific sequence. The standard library

also uses iterators for accessing a

specific element: when an associative

container returns one through find. The

standard library consistently uses the same types of iterators

for both purposes: going over a range, and accessing a specific

found element. Before the introduction of hash-based containers

to the standard library, this made sense (with the exception of

priority queues, which are discussed later).

Using the standard associative containers together with non-order-preserving associative containers (and also because of priority-queues container), there is a possible need for different types of iterators for self-organizing containers: the iterator concept seems overloaded to mean two different things (in some cases). XXX "ds_gen.html#find_range">Design::Associative Containers::Data-Structure Genericity::Point-Type and Range-Type Methods.

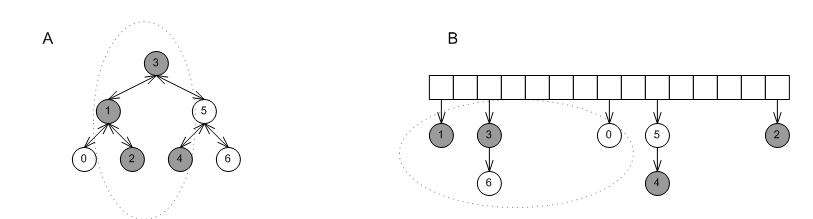

Suppose cntnr is some associative

container, and say c is an object of

type cntnr. Then what will be the outcome

of

std::for_each(c.find(1), c.find(5), foo);

If cntnr is a tree-based container

object, then an in-order walk will

apply foo to the relevant elements,

as in the graphic below, label A. If c is

a hash-based container, then the order of elements between any

two elements is undefined (and probably time-varying); there is

no guarantee that the elements traversed will coincide with the

logical elements between 1 and 5, as in

label B.

In our opinion, this problem is not caused just because

red-black trees are order preserving while

collision-chaining hash tables are (generally) not - it

is more fundamental. Most of the standard's containers

order sequences in a well-defined manner that is

determined by their interface:

calling insert on a tree-based

container modifies its sequence in a predictable way, as

does calling push_back on a list or

a vector. Conversely, collision-chaining hash tables,

probing hash tables, priority queues, and list-based

containers (which are very useful for "multimaps") are

self-organizing data structures; the effect of each

operation modifies their sequences in a manner that is

(practically) determined by their

implementation.

Consequently, applying an algorithm to a sequence obtained from most containers may or may not make sense, but applying it to a sub-sequence of a self-organizing container does not.

Suppose c is some collision-chaining

hash-based container object, and one calls

c.find(3)

Then what composes the returned iterator?

In the graphic below, label A shows the simplest (and

most efficient) implementation of a collision-chaining

hash table. The little box marked

point_iterator shows an object

that contains a pointer to the element's node. Note that

this "iterator" has no way to move to the next element (

it cannot support

operator++). Conversely, the little

box marked iterator stores both a

pointer to the element, as well as some other

information (the bucket number of the element). the

second iterator, then, is "heavier" than the first one-

it requires more time and space. If we were to use a

different container to cross-reference into this

hash-table using these iterators - it would take much

more space. As noted above, nothing much can be done by

incrementing these iterators, so why is this extra

information needed?

Alternatively, one might create a collision-chaining hash-table where the lists might be linked, forming a monolithic total-element list, as in the graphic below, label B. Here the iterators are as light as can be, but the hash-table's operations are more complicated.

It should be noted that containers based on collision-chaining hash-tables are not the only ones with this type of behavior; many other self-organizing data structures display it as well.

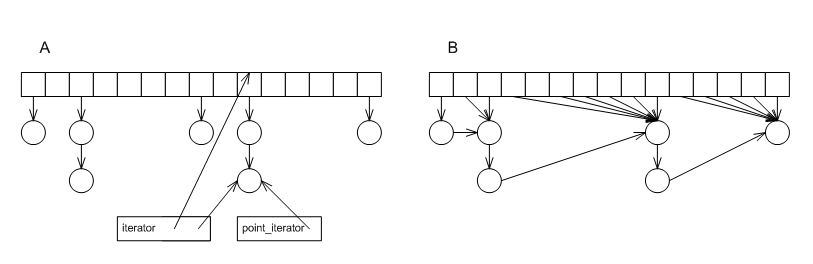

Consider the following snippet:

it = c.find(3); c.erase(5);

Following the call to erase, what is the

validity of it: can it be de-referenced?

can it be incremented?

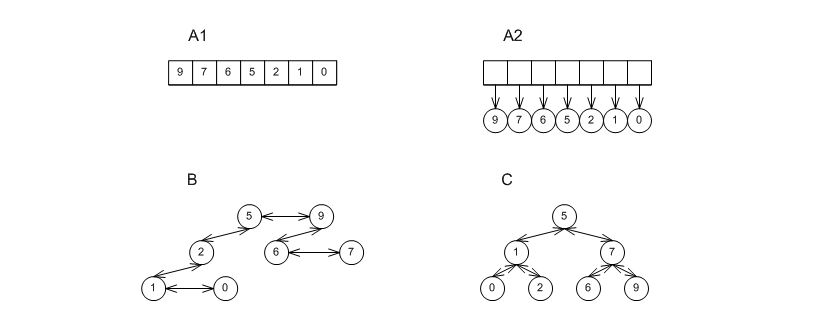

The answer depends on the underlying data structure of the container. The graphic below shows three cases: A1 and A2 show a red-black tree; B1 and B2 show a probing hash-table; C1 and C2 show a collision-chaining hash table.

Erasing 5 from A1 yields A2. Clearly, an iterator to 3 can be de-referenced and incremented. The sequence of iterators changed, but in a way that is well-defined by the interface.

Erasing 5 from B1 yields B2. Clearly, an iterator to 3 is not valid at all - it cannot be de-referenced or incremented; the order of iterators changed in a way that is (practically) determined by the implementation and not by the interface.

Erasing 5 from C1 yields C2. Here the situation is more complicated. On the one hand, there is no problem in de-referencing

it. On the other hand, the order of iterators changed in a way that is (practically) determined by the implementation and not by the interface.

So in the standard library containers, it is not always possible

to express whether it is valid or not. This

is true also for insert. Again, the

iterator concept seems overloaded.

The design of the functional overlay to the underlying data structures differs slightly from some of the conventions used in the C++ standard. A strict public interface of methods that comprise only operations which depend on the class's internal structure; other operations are best designed as external functions. (See [biblio.meyers02both]).With this rubric, the standard associative containers lack some useful methods, and provide other methods which would be better removed.

Order-preserving standard associative containers provide the method

iterator erase(iterator it)

which takes an iterator, erases the corresponding element, and returns an iterator to the following element. Also standardd hash-based associative containers provide this method. This seemingly increasesgenericity between associative containers, since it is possible to use

typename C::iterator it = c.begin(); typename C::iterator e_it = c.end(); while(it != e_it) it = pred(*it)? c.erase(it) : ++it;

in order to erase from a container object

call element which match a predicatepred. However, in a different sense this actually decreases genericity: an integral implication of this method is that tree-based associative containers' memory use is linear in the total number of elements they store, while hash-based containers' memory use is unbounded in the total number of elements they store. Assume a hash-based container is allowed to decrease its size when an element is erased. Then the elements might be rehashed, which means that there is no "next" element - it is simply undefined. Consequently, it is possible to infer from the fact that the standard library's hash-based containers provide this method that they cannot downsize when elements are erased. As a consequence, different code is needed to manipulate different containers, assuming that memory should be conserved. Therefor, this library's non-order preserving associative containers omit this method.All associative containers include a conditional-erase method

template< class Pred> size_type erase_if (Pred pred)

which erases all elements matching a predicate. This is probably the only way to ensure linear-time multiple-item erase which can actually downsize a container.

The standard associative containers provide methods for multiple-item erase of the form

size_type erase(It b, It e)

erasing a range of elements given by a pair of iterators. For tree-based or trie-based containers, this can implemented more efficiently as a (small) sequence of split and join operations. For other, unordered, containers, this method isn't much better than an external loop. Moreover, if

cis a hash-based container, thenc.erase(c.find(2), c.find(5))

is almost certain to do something different than erasing all elements whose keys are between 2 and 5, and is likely to produce other undefined behavior.

It is well-known that tree-based and trie-based container objects can be efficiently split or joined (See [biblio.clrs2001]). Externally splitting or joining trees is super-linear, and, furthermore, can throw exceptions. Split and join methods, consequently, seem good choices for tree-based container methods, especially, since as noted just before, they are efficient replacements for erasing sub-sequences.

The standard associative containers provide methods of the form

template<class It> size_type insert(It b, It e);

for inserting a range of elements given by a pair of iterators. At best, this can be implemented as an external loop, or, even more efficiently, as a join operation (for the case of tree-based or trie-based containers). Moreover, these methods seem similar to constructors taking a range given by a pair of iterators; the constructors, however, are transactional, whereas the insert methods are not; this is possibly confusing.

Associative containers are parametrized by policies allowing to

test key equivalence: a hash-based container can do this through

its equivalence functor, and a tree-based container can do this

through its comparison functor. In addition, some standard

associative containers have global function operators, like

operator== and operator<=,

that allow comparing entire associative containers.

In our opinion, these functions are better left out. To begin

with, they do not significantly improve over an external

loop. More importantly, however, they are possibly misleading -

operator==, for example, usually checks for

equivalence, or interchangeability, but the associative

container cannot check for values' equivalence, only keys'

equivalence; also, are two containers considered equivalent if

they store the same values in different order? this is an

arbitrary decision.

Priority queues are containers that allow efficiently inserting

values and accessing the maximal value (in the sense of the

container's comparison functor). Their interface

supports push

and pop. The standard

container std::priorityqueue indeed support

these methods, but little else. For algorithmic and

software-engineering purposes, other methods are needed:

Many graph algorithms (see [biblio.clrs2001]) require increasing a value in a priority queue (again, in the sense of the container's comparison functor), or joining two priority-queue objects.

The return type of

priority_queue'spushmethod is a point-type iterator, which can be used for modifying or erasing arbitrary values. For example:priority_queue<int> p; priority_queue<int>::point_iterator it = p.push(3); p.modify(it, 4);

These types of cross-referencing operations are necessary for making priority queues useful for different applications, especially graph applications.

It is sometimes necessary to erase an arbitrary value in a priority queue. For example, consider the

selectfunction for monitoring file descriptors:int select(int nfds, fd_set *readfds, fd_set *writefds, fd_set *errorfds, struct timeval *timeout);

then, as the select documentation states:

“ The nfds argument specifies the range of file descriptors to be tested. The select() function tests file descriptors in the range of 0 to nfds-1.”

It stands to reason, therefore, that we might wish to maintain a minimal value for

nfds, and priority queues immediately come to mind. Note, though, that when a socket is closed, the minimal file description might change; in the absence of an efficient means to erase an arbitrary value from a priority queue, we might as well avoid its use altogether.The standard containers typically support iterators. It is somewhat unusual for

std::priority_queueto omit them (See [biblio.meyers01stl]). One might ask why do priority queues need to support iterators, since they are self-organizing containers with a different purpose than abstracting sequences. There are several reasons:Iterators (even in self-organizing containers) are useful for many purposes: cross-referencing containers, serialization, and debugging code that uses these containers.

The standard library's hash-based containers support iterators, even though they too are self-organizing containers with a different purpose than abstracting sequences.

In standard-library-like containers, it is natural to specify the interface of operations for modifying a value or erasing a value (discussed previously) in terms of a iterators. It should be noted that the standard containers also use iterators for accessing and manipulating a specific value. In hash-based containers, one checks the existence of a key by comparing the iterator returned by

findto the iterator returned byend, and not by comparing a pointer returned byfindto NULL.

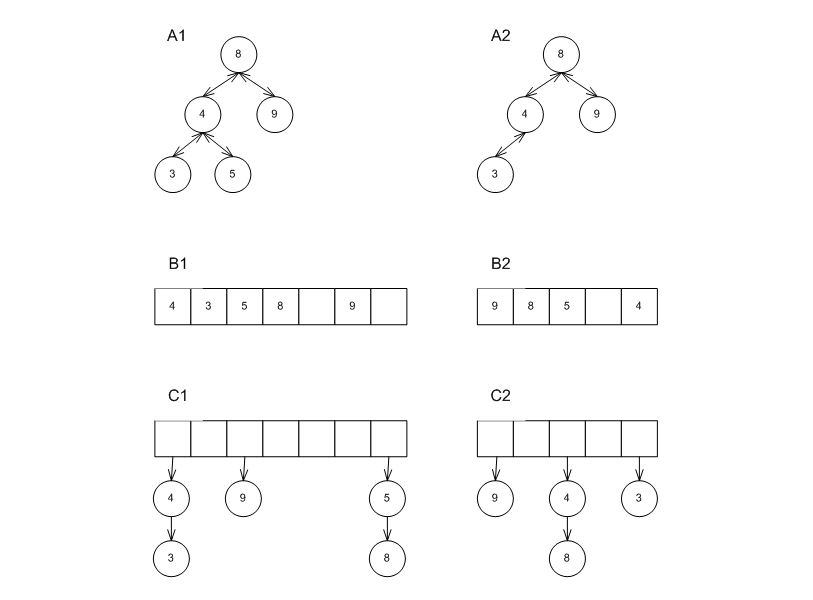

There are three main implementations of priority queues: the first employs a binary heap, typically one which uses a sequence; the second uses a tree (or forest of trees), which is typically less structured than an associative container's tree; the third simply uses an associative container. These are shown in the figure below with labels A1 and A2, B, and C.

No single implementation can completely replace any of the

others. Some have better push

and pop amortized performance, some have

better bounded (worst case) response time than others, some

optimize a single method at the expense of others, etc. In

general the "best" implementation is dictated by the specific

problem.

As with associative containers, the more implementations co-exist, the more necessary a traits mechanism is for handling generic containers safely and efficiently. This is especially important for priority queues, since the invalidation guarantees of one of the most useful data structures - binary heaps - is markedly different than those of most of the others.

Binary heaps are one of the most useful underlying

data structures for priority queues. They are very efficient in

terms of memory (since they don't require per-value structure

metadata), and have the best amortized push and

pop performance for primitive types like

int.

The standard library's priority_queue

implements this data structure as an adapter over a sequence,

typically

std::vector

or std::deque, which correspond to labels

A1 and A2 respectively in the graphic above.

This is indeed an elegant example of the adapter concept and the algorithm/container/iterator decomposition. (See [biblio.nelson96stlpq]). There are several reasons why a binary-heap priority queue may be better implemented as a container instead of a sequence adapter:

std::priority_queuecannot erase values from its adapted sequence (irrespective of the sequence type). This means that the memory use of anstd::priority_queueobject is always proportional to the maximal number of values it ever contained, and not to the number of values that it currently contains. (Seeperformance/priority_queue_text_pop_mem_usage.cc.) This implementation of binary heaps acts very differently than other underlying data structures (See also pairing heaps).Some combinations of adapted sequences and value types are very inefficient or just don't make sense. If one uses

std::priority_queue<std::vector<std::string> > >, for example, then not only will each operation perform a logarithmic number ofstd::stringassignments, but, furthermore, any operation (includingpop) can render the container useless due to exceptions. Conversely, if one usesstd::priority_queue<std::deque<int> > >, then each operation uses incurs a logarithmic number of indirect accesses (through pointers) unnecessarily. It might be better to let the container make a conservative deduction whether to use the structure in the graphic above, labels A1 or A2.There does not seem to be a systematic way to determine what exactly can be done with the priority queue.

If

pis a priority queue adapting anstd::vector, then it is possible to iterate over all values by using&p.top()and&p.top() + p.size(), but this will not work ifpis adapting anstd::deque; in any case, one cannot usep.begin()andp.end(). If a different sequence is adapted, it is even more difficult to determine what can be done.If

pis a priority queue adapting anstd::deque, then the reference return byp.top()

will remain valid until it is popped, but if

padapts anstd::vector, the nextpushwill invalidate it. If a different sequence is adapted, it is even more difficult to determine what can be done.

Sequence-based binary heaps can still implement linear-time

eraseandmodifyoperations. This means that if one needs to erase a small (say logarithmic) number of values, then one might still choose this underlying data structure. Usingstd::priority_queue, however, this will generally change the order of growth of the entire sequence of operations.

[biblio.abrahams97exception] STL Exception Handling Contract . 1997. ISO SC22/WG21 .

[biblio.alexandrescu01modern] Modern C++ Design: Generic Programming and Design Patterns Applied . 2001 . Addison-Wesley Publishing Company .

[biblio.andrew04mtf] MTF, Bit, and COMB: A Guide to Deterministic and Randomized Algorithms for the List Update Problem .

[biblio.austern00noset] Why You Shouldn't Use set - and What You Should Use Instead . April, 2000 . C++ Report .

[biblio.austern01htprop] A Proposal to Add Hashtables to the Standard Library . 2001 . ISO SC22/WG21 .

[biblio.austern98segmentedit] Segmented iterators and hierarchical algorithms . April, 1998 . Generic Programming .

[biblio.dawestimer] Boost Timer Library . Boost .

[biblio.clearypool] Boost Pool Library . Boost .

[biblio.maddocktraits] Boost Type Traits Library . Boost .

[biblio.brodal96priority] Worst-case efficient priority queues .

[biblio.bulkamayheweff] Efficient C++ Programming Techniques . 1997 . Addison-Wesley Publishing Company .

[biblio.dubhashi98neg] Balls and bins: A study in negative dependence . 1998 . Random Structures and Algorithms 13 .

[biblio.fagin79extendible] Extendible hashing - a fast access method for dynamic files . 1979 . ACM Trans. Database Syst. 4 .

[biblio.filliatre2000ptset] Ptset: Sets of integers implemented as Patricia trees . 2000 .

[biblio.fredman86pairing] The pairing heap: a new form of self-adjusting heap . 1986 .

[biblio.gof] Design Patterns - Elements of Reusable Object-Oriented Software . 1995 . Addison-Wesley Publishing Company .

[biblio.jossutis01stl] The C++ Standard Library - A Tutorial and Reference . 2001 . Addison-Wesley Publishing Company .

[biblio.kt99fat_heaps] New Heap Data Structures . 1999 .

[biblio.knuth98sorting] The Art of Computer Programming - Sorting and Searching . 1998 . Addison-Wesley Publishing Company .

[biblio.litwin80lh] Linear hashing: A new tool for file and table addressing . June 1980 . Proceedings of International Conference on Very Large Data Bases .

[biblio.meyers96more] More Effective C++: 35 New Ways to Improve Your Programs and Designs . 1996 . Addison-Wesley Publishing Company .

[biblio.meyers00nonmember] How Non-Member Functions Improve Encapsulation . 2000 . C/C++ Users Journal .

[biblio.meyers01stl] Effective STL: 50 Specific Ways to Improve Your Use of the Standard Template Library . 2001 . Addison-Wesley Publishing Company .

[biblio.mscom] COM: Component Model Object Technologies . Microsoft .

[biblio.musser95rationale] Rationale for Adding Hash Tables to the C++ Standard Template Library . 1995 .

[biblio.musser96stltutorial] STL Tutorial and Reference Guide . 1996 . Addison-Wesley Publishing Company .

[biblio.nelson96stlpq] Priority Queues and the STL . January 1996 . Dr. Dobbs Journal .

[biblio.sgi_stl] Standard Template Library Programmer's Guide . SGI .

[biblio.select_man] select .

[biblio.sleator84amortized] Amortized Efficiency of List Update Problems . 1984 . ACM Symposium on Theory of Computing .

[biblio.sleator85self] Self-Adjusting Binary Search Trees . 1985 . ACM Symposium on Theory of Computing .